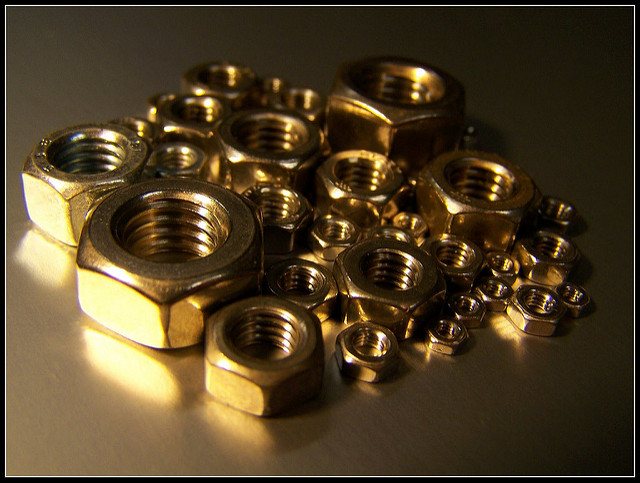

The recently concluded Bay Area Deep Learning School, organized by Andrew Ng, Samy Bengio, Pieter Abbeel and Shubho Sengupta, was an excellent event for understanding the state of the art in deep learning and its various subfields. At the end of day 1, Andrew Ng gave a talk on the Nuts and Bolts of Deep Learning, focused mainly on how to productionize deep learning models. It was a whiteboard session with no slides, so this is an attempt at summarizing what I took away from the talk.

Summary of models

Today’s deep learning models can be divided into the following types:

- Fully connected/dense: These are traditional, general-purpose deep learning models, characterized by several (usually more than three) hidden layers. Every neuron in a layer is connected to every neuron in the next layer. Training techniques include dropout, among others.

- Image Models (Convolutional Neural Networks, etc.): These were popularized for image recognition by Hinton and his team, building on earlier convolutional networks from Yann LeCun. These networks have a number of convolutional layers, each typically followed by a max pooling layer, and then some fully connected dense layers (usually at the end, to generate outputs with softmax). They are exceptionally good at processing images and, more generally, at exploiting local correlation in the input.

- Sequence Models: These include LSTMs, GRUs, etc. Recently, Google’s seq2seq (sequence-to-sequence) models have been used with considerable success.

- Other Models: A lot of work is going on in unsupervised learning, reinforcement learning, etc. This work is still in its infancy.
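The image-model bullet mentions dense layers at the end that generate outputs with softmax. As a minimal sketch in plain Python (the three scores below are made-up values for hypothetical classes), softmax turns a vector of raw scores into the kind of probability vector used for multi-class classification:

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores (logits) from a final dense layer into class probabilities."""
    # Subtracting the largest logit avoids overflow in exp() without changing the result.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three classes, e.g. "cat", "dog", "car".
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # → [0.659, 0.242, 0.099]
```

The outputs are positive and sum to 1, and the largest score receives the largest probability.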

Neural networks also have a notion of capacity: a higher-capacity network can represent more complex functions and therefore capture more of the structure in the data. Capacity grows with the number of parameters, layers, neurons, etc. in the model.
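Parameter count is one rough, commonly used proxy for capacity. A minimal sketch, assuming a plain fully connected network with one bias per neuron; the function name and the layer sizes (e.g. a 784-pixel input and 10 output classes) are hypothetical:

```python
def dense_param_count(layer_sizes: list[int]) -> int:
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input first, output last.
    A layer with n_out neurons fed by n_in inputs has n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical architectures: adding width and depth raises the parameter count.
small = dense_param_count([784, 100, 10])
large = dense_param_count([784, 512, 512, 512, 10])
print(small, large)  # → 79510 932362
```

Going from one hidden layer of 100 units to three hidden layers of 512 raises the count roughly twelvefold here.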

Today, most of the enterprise value is being derived from the first three types. However, Andrew felt that the future of AI and machine learning lies in unsupervised and reinforcement learning.

Key Trends

A number of key trends and tips were laid out during the talk. Here is a summary of these:

Trend / Tip #1: Neural Networks Outperform Other ML Techniques

A small neural network usually outperforms traditional machine learning algorithms, which rely heavily on hand-coded feature engineering. With large data sets, hand-engineering features can become very hard, and there is always the curse of dimensionality.

With large data sets, a large NN works much better than traditional machine learning methods. In fact, the accuracy of deep learning methods keeps improving with more data, whereas the accuracy of other methods usually plateaus after a point.

Trend / Tip #2: End to End Deep Learning

Until recently, the output of most networks was numerical: either a single number, a vector of probabilities for multi-class classification, or something similar. Now we are seeing more complex outputs, such as images and text, coming from networks through the use of auto-encoders, deconvolutional layers, etc. Some examples include:

- Google Deep Dream: a network that can generate new images from existing ones; related neural style-transfer networks can even restyle images/photos in the style of a given painter.

- Google seq2seq to generate email responses: Given an email, a seq2seq network can generate a short block of text that could be used as a response. This is now implemented in Google’s Inbox app as Smart Reply (see the paper).

- Image captioning: generating a caption or description for a given image (see this paper).

- Generating images from captions: Given a caption, can an image be generated? This combines the image generation and captioning examples above.

- Speech recognition: The traditional approach is to extract hand-engineered features from the audio and recognize intermediate units such as phonemes, then use a model such as an HMM to turn these into text. Can these steps be short-circuited completely, going from the input audio directly to text? This approach is doing quite well, notably in Baidu’s Deep Speech model.

This is end-to-end deep learning, and it is the key trend to watch as more and more systems are converted to this scheme.

This needs a lot of labelled data to succeed. Access to enough labelled data is the biggest challenge in making end-to-end deep learning work.

SIDE BAR: Synthetic Data Generation

As noted above for end-to-end deep learning, having enough labelled data is a problem. In many cases, it is possible to hand-engineer synthetic data to help train the network. Here are some strategies for generating synthetic data:

- Computer Vision: Apply a set of filters and transforms to the existing images, such as adding noise/speckles or warping parts of the image. This lets the network learn to cope with the added noise and generalize better.

- Speech Recognition: Audio samples of background noise (cafes, train stations, roads/intersections, offices, etc.) can be found on the internet. Clean audio samples can be combined with these background samples to generate many more. Further, transforms such as reverb can be applied to increase the size of the labelled set.

- Optical Character Recognition: The simplest flow is to pick a random image from the internet, pick a random font and size, and render text on top of the image. The synthesized image can then be fed to the network for training.
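For the speech strategy, the core step is mixing a clean clip with background noise at a controlled loudness. A minimal sketch in plain Python, assuming both clips are lists of float samples at the same sample rate; the signal-to-noise ratio (SNR) parameter, the function name, and the synthetic tone/noise stand-ins are illustrative assumptions, not details from the talk:

```python
import math
import random


def mix_at_snr(clean: list[float], noise: list[float], snr_db: float) -> list[float]:
    """Add background noise to a clean clip at a target signal-to-noise ratio (dB).

    Both clips are lists of samples at the same sample rate. The noise clip is
    looped (or trimmed) to match the length of the clean clip.
    """
    def power(x: list[float]) -> float:
        # Mean squared amplitude of a clip.
        return sum(s * s for s in x) / len(x)

    looped = [noise[i % len(noise)] for i in range(len(clean))]
    p_clean, p_noise = power(clean), power(looped)
    if p_noise == 0:
        raise ValueError("noise clip is silent")
    # Choose scale so that 10 * log10(p_clean / (scale**2 * p_noise)) == snr_db.
    scale = math.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return [c + scale * n for c, n in zip(clean, looped)]


# Stand-ins: a 1 s, 440 Hz tone at 16 kHz for a clean utterance, and Gaussian
# noise for a hypothetical recorded cafe clip.
random.seed(0)
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
cafe = [random.gauss(0.0, 1.0) for _ in range(4000)]
noisy = mix_at_snr(clean, cafe, snr_db=10)
```

Mixing each clean utterance with many noise clips at several SNRs (say, 0 to 20 dB) multiplies the labelled set, since the transcript label stays the same.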

Note: While this can help with data, make sure the synthetic data matches the distribution of the expected test data. For example, if you are generating images for OCR, pay attention to the color, saturation, brightness, etc. of the test set to make sure what is being generated is in the same space.
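One simple way to act on this note is to compare summary statistics of the generated images against the test set. A minimal sketch, assuming grayscale images stored as nested lists with pixel values in [0, 1]; the function names and the 0.05 tolerance are hypothetical, and a real check would also cover color and saturation:

```python
import statistics


def mean_brightness(images: list[list[list[float]]]) -> float:
    """Average brightness over a set of grayscale images (pixels in [0, 1])."""
    per_image = [statistics.fmean(px for row in img for px in row) for img in images]
    return statistics.fmean(per_image)


def brightness_mismatch(synthetic, test, tolerance: float = 0.05) -> bool:
    """True if the two image sets differ in mean brightness by more than tolerance."""
    return abs(mean_brightness(synthetic) - mean_brightness(test)) > tolerance


# Tiny 2x2 "images": the synthetic set is far brighter than the test set.
test_imgs = [[[0.4, 0.5], [0.5, 0.6]], [[0.5, 0.5], [0.5, 0.5]]]
synthetic_imgs = [[[0.9, 0.8], [0.9, 1.0]]]
print(brightness_mismatch(synthetic_imgs, test_imgs))  # → True
```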

Trend / Tip #3: New Bias/Variance Tradeoff

The bias/variance tradeoff is a common concern when tuning machine learning methods for better performance. For deep learning networks, it takes quite a different form.

More often than not, deep learning networks are compared against human-level performance. It is also common for the training and test sets to be sampled from different distributions.

SIDE BAR: Training and test sets from different distributions

Consider building a new voice-operated GPS. There may not be enough voice samples of its specific grammar and commands. However, there may be a large collection of general spoken audio, which could form part of the training set. Further, you may record a number of people speaking the commands that will be built into the GPS system; this would primarily be the test set. Now we have a case where the training and test sets come from different distributions.

Consider a general workflow for bias/variance decisions when the training and test samples may come from different distributions, as described in the sidebar.

Consider the following sets that are constructed from the data:

- Training set

- Training-dev set: A cross-validation set carved out of the training set (same distribution as the training data)

- Test-dev set: A cross-validation set carved out of the test set (which could potentially come from a different source/distribution – see sidebar)

- Test set
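The four sets above can be sketched as follows, using the GPS sidebar as the example. This is a minimal illustration, assuming two pools of examples (plentiful general speech for training, scarcer recorded GPS commands for testing); the function name, split fractions, and clip identifiers are hypothetical:

```python
import random


def make_splits(train_pool: list, test_pool: list, train_dev_frac: float = 0.05,
                test_dev_frac: float = 0.5, seed: int = 0) -> dict[str, list]:
    """Build training, training-dev, test-dev and test sets from two pools.

    train_pool: examples from the (plentiful) training distribution.
    test_pool: examples from the distribution the system will actually face.
    """
    rng = random.Random(seed)
    train_pool, test_pool = list(train_pool), list(test_pool)
    rng.shuffle(train_pool)
    rng.shuffle(test_pool)
    n_train_dev = int(len(train_pool) * train_dev_frac)
    n_test_dev = int(len(test_pool) * test_dev_frac)
    return {
        "train": train_pool[n_train_dev:],
        "train_dev": train_pool[:n_train_dev],  # same distribution as train
        "test_dev": test_pool[:n_test_dev],     # same distribution as test
        "test": test_pool[n_test_dev:],
    }


general_speech = [f"clip_{i}" for i in range(1000)]  # hypothetical clip ids
gps_commands = [f"cmd_{i}" for i in range(200)]      # hypothetical recordings
splits = make_splits(general_speech, gps_commands)
print({name: len(s) for name, s in splits.items()})
# → {'train': 950, 'train_dev': 50, 'test_dev': 100, 'test': 100}
```

Keeping the training-dev set in the training distribution is what later lets a variance problem be told apart from a train/test mismatch.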

Now measure the following errors across these sets:

- Human-level error: This can be considered a good surrogate for the optimal error rate, or Bayes error rate.

- Training error

- Training-dev set error

- Test-dev set error

- Test set error

Decision Workflow

Step 1: If human-level error is significantly lower than training error (high bias), then:

- Build a bigger model

- Train the model longer

- Try a new model architecture

Step 2: If Step 1 passes, but the training-dev set error is now significantly higher than the training error (high variance), then try the following:

- Regularization of parameters

- Try to get more data to train on

- Try a new model architecture

Step 3: If Step 2 passes, but the test-dev set error is significantly higher than the training-dev set error (train/test data mismatch), then:

- Synthesize more data (see the sidebar above)

- Get more data similar to the test data

- Try a new model architecture

Step 4: If the test set error is significantly higher than the test-dev set error (overfitting to the test-dev set), then:

- Get more data

- Try a new model architecture
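The decision workflow can be sketched as a function that checks each gap in order and returns the first step whose gap is significant. A minimal sketch, assuming errors are given as fractions; the function name, the diagnosis labels, and the 1% `threshold` are hypothetical, illustrative choices:

```python
def diagnose(human: float, train: float, train_dev: float, test_dev: float,
             test: float, threshold: float = 0.01) -> tuple[str, list[str]]:
    """Walk the four-step workflow and return the first problem found.

    Errors are fractions (0.05 means 5%). `threshold` is an arbitrary,
    illustrative cut-off for a "significant" gap between adjacent errors.
    """
    steps = [
        # (diagnosis, gap between adjacent errors, suggested actions)
        ("bias", train - human,
         ["bigger model", "train longer", "new model architecture"]),
        ("variance", train_dev - train,
         ["regularization", "more training data", "new model architecture"]),
        ("train/test mismatch", test_dev - train_dev,
         ["synthesize more data", "get more data", "new model architecture"]),
        ("overfitting test-dev", test - test_dev,
         ["get more data", "new model architecture"]),
    ]
    # Check the steps in order: a later step only matters once earlier ones pass.
    for diagnosis, gap, actions in steps:
        if gap > threshold:
            return diagnosis, actions
    return "no significant gap", []


print(diagnose(human=0.01, train=0.015, train_dev=0.016, test_dev=0.10, test=0.105))
# → ('train/test mismatch', ['synthesize more data', 'get more data', 'new model architecture'])
```

Because the checks run in order, a large bias gap is reported before any later gap, mirroring the "if Step 1 passes" structure of the workflow.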

You can always take one of two simple options: more data or a bigger model, so there is usually a way out when deep learning performance is stuck. In the previous world of machine learning, you had to trade bias off against variance (e.g. through regularization of parameters). That is no longer true of deep learning.

Two Suggestions from Andrew for Your Management

1. Build a unified data warehouse. Debate access control, not whether to have a unified data warehouse.

2. Co-locate the high-performance computing (HPC) team with the deep learning/AI team.

What Can AI/Deep Learning Do – for Product Managers

As product managers think about where to apply the latest advances in deep learning, here are two simple rules of thumb:

- If a typical person can do a mental task in less than a second, a deep learning network can probably do it too

- Predicting the next event/outcome in a sequence of events

If your problem falls into either of these two areas, it is probably a good candidate. However, that does not mean the requisite models will be easy to build.

How to Build Career in Machine Learning

Many people ask Andrew this question: “What should I do after the machine learning course on Coursera? Do I need a Ph.D.?”

There is the Saturday story, which you should definitely read if you haven’t. In addition, he mentioned the following:

- How to be a good researcher:

  - Read a lot of papers

  - Try to replicate the results published in the papers. A lot of ideas will be generated as a consequence

  - Do the dirty work of data collection, munging, coding, etc. along with the model development. But don’t only do dirty work

NOTE: I tried to fill in many of the things Andrew said with my own understanding. Any confusion or error is most certainly in my understanding.

Image Credit: https://www.flickr.com/photos/martinofranchi/3222341620/in/photolist-5UKkU9-dswt16-8Rda9C-jUUKN-pZh7LS-9wZu8C-8QkXM1-ezcie3-iXdDn-k7M2vc-9wZt2q-k7PT3U-rQLjW1-axjqx9-3w1V9-k7Mjj2-9khRNT-72QBTS-dkEZVZ-8RdbhW-fuXTn4-e5jNA6-72QCnS-dJgCaf-k7Mitz-6CkM4M-65eBZN-72LCMH-k7Mevz-9khQ6F-dMaT4e-k7MNnp-9kkSpQ-yPSBj-72LCqx-aerDQF-k7MR1v-9khTcz-9kkMJE-9jH3Zp-5Ucqid-8Y6Xrj-9kkSNf-k7MaGn-dswDcm-72QCYu-9AzrkE-mYeYZ-bvMgNT-9khKmR